Artificial intelligence (AI) has become a driving force across many technological fields, from healthcare to security and now emotion recognition. AI researchers keep improving the underlying algorithms, including those based on Natural Language Processing (NLP). AI emotion recognition matters more today than ever before, and new applications keep emerging. As the technology matures, it becomes better at recognizing emotions and helping people in fields like robotics, home automation, mobile applications, healthcare, and chat apps.

Progress in this area will help AI and machine learning systems analyze human emotions and respond appropriately in a given situation. This will further refine human-machine interaction and make it more personalized based on emotions. Mobile applications have advanced as well. They now use AI models to understand human emotions through text, facial expressions, voice analysis, and contextual cues.

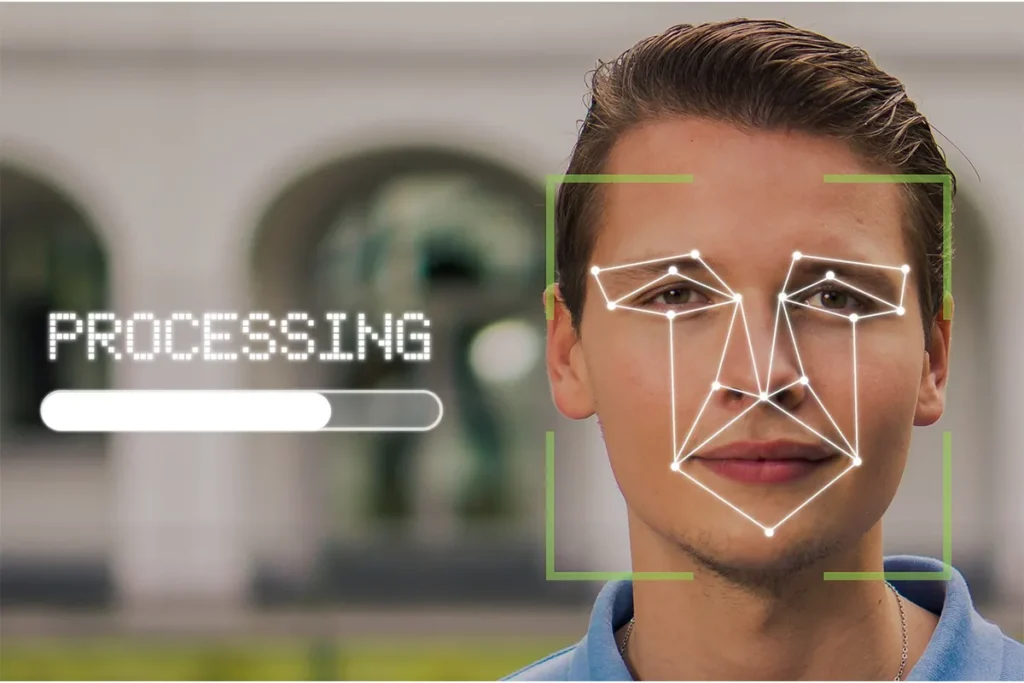

How AI Facial Emotion Recognition Works

Emotion AI is a fast-changing technology that helps machines understand and respond to human feelings. It relies on advanced algorithms and complex data processing, which makes it useful in mental health, customer service, and gaming. This section looks at how the technology works: its main parts, its tools, and the processes behind emotion recognition.

- Machine Learning Algorithms: Machine learning algorithms power AI facial emotion recognition. They use methods such as deep learning and neural networks to analyze facial features and classify emotions. The algorithms learn from large data sets of face images that show different emotions. This helps the models find patterns and make accurate predictions on new data they have not seen before.

- Data Collection and Training Processes: The success of emotion recognition systems depends on good data collection and training. High-quality data sets are essential. They should contain varied images of faces showing different emotions. Training on such data allows the algorithms to perform well under different conditions, including changes in lighting, camera angle, and individual facial expressions.

- Facial Feature Detection: Facial feature detection locates the important parts of the face, such as the eyes, nose, and mouth. Convolutional Neural Networks (CNNs) are often used for this step. Finding these features accurately matters because small changes in facial expression can signal different emotions.

- Emotion Classification: After detecting facial features, the next step is to classify emotions. This step puts the emotions into groups. The groups can include happiness, sadness, anger, and surprise. Classification algorithms check the features that were found. They determine which emotion is most likely being shown. They often use supervised learning methods for this task.
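
The classification step described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the `EMOTIONS` list, the `softmax` and `classify` helpers, and the example scores are all hypothetical and stand in for the output of a trained model. The sketch converts raw scores into probabilities and picks the most likely emotion.

```python
import math

# Hypothetical label set: the four emotions named in the text.
EMOTIONS = ["happiness", "sadness", "anger", "surprise"]

def softmax(scores):
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(scores, labels=EMOTIONS):
    """Return the most likely emotion and its probability."""
    if len(scores) != len(labels):
        raise ValueError("expected one score per emotion label")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

# Made-up scores standing in for the output of a trained model.
label, confidence = classify([2.0, 0.5, 0.1, 1.2])
print(label, round(confidence, 2))
```

Returning the probability alongside the label lets an application ignore low-confidence predictions instead of acting on every guess.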

AI facial emotion recognition systems rely on several tools and libraries for development and deployment. OpenCV is a popular library for image processing. TensorFlow and PyTorch are common choices for building and training deep learning models. Together, these tools give developers the functions needed to build robust emotion recognition applications.
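
To make the CNN idea more concrete, here is a minimal sketch of the sliding-window operation that a convolutional layer performs. It is written in plain Python rather than with any of the libraries above, so it does not show a real library's API. The `convolve2d` function, the toy image patch, and the hand-written edge kernel are assumptions for illustration. In a real CNN the kernels are learned from training data.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like most deep learning frameworks, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            acc = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output

# Toy 4x4 grayscale patch: dark on the left, bright on the right.
patch = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-written vertical edge kernel; a trained CNN learns its kernels from data.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = convolve2d(patch, vertical_edge)
print(feature_map)  # [[27, 27], [27, 27]]
```

The strong response shows the kernel reacting to the dark-to-bright edge. Stacking many learned kernels of this kind is how a CNN picks out features such as the outline of an eye or the corner of a mouth.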

AI Facial Emotion Recognition Applications

Facial emotion recognition technology can be used in many industries. It changes how we interact with machines and helps us understand human emotions better. The technology uses algorithms to analyze facial expressions and other non-verbal signals, which allows for better interactions in healthcare, marketing, security, gaming, and mobile chat apps. By identifying emotional states correctly, AI can improve communication and make experiences more personal. It can also create more engaging environments that help build deeper connections in both digital and real-life situations.

1. Monitoring Patients’ Emotions: AI facial emotion recognition can help improve mental health care by providing tools to track patients’ emotions over time. By looking at small changes in facial expressions, healthcare providers can spot signs of anxiety, depression, or stress, even when patients do not show their feelings openly. This continuous monitoring can lead to timely help and to more personal and effective treatment plans that meet patient needs.

2. Enhancing Patient-Physician Communication: This technology also helps communication between patients and doctors. By understanding patients’ emotions during visits, healthcare providers can adjust their approach and create a more caring and supportive atmosphere. This improves the quality of care and builds trust and rapport between patients and their healthcare workers. Better communication can lead to better health outcomes.

3. Assessing Consumer Reactions: AI emotion recognition provides valuable insights into how consumers react to products and ads. Companies can analyze facial expressions during marketing campaigns to understand emotional responses better. This helps them see which elements connect well with their audience. Using this information helps improve marketing strategies and makes campaigns more effective.

4. Personalizing Marketing Strategies: Also, businesses can use emotional data to make marketing more personal. They can change messages to fit what consumers feel at the moment. This kind of personalization makes consumers more interested. It also helps build brand loyalty. Customers feel that the brand understands them. This feeling can lead to higher sales and conversion rates.

5. Identifying Threats and Suspicious Behavior: AI facial emotion recognition helps improve security. It can identify potential threats by looking at emotional signs. Surveillance systems that use this technology can recognize signs of agitation or distress in people. This ability lets officials take action before problems arise.

6. Enhancing Public Safety: Using AI in public places can improve safety. Authorities can monitor crowd emotions more effectively, which helps them spot possible problems early. It allows officials to act quickly to prevent incidents during big events and so helps protect public safety.

7. Creating Immersive Experiences: In gaming, AI emotion recognition improves player experiences. It can change the game environment based on how players feel. This ability creates more immersive experiences. The game reacts to players’ emotions, making the interactions more real and exciting.

8. Adaptive Storytelling Based on Player Emotions: Emotion recognition also allows for adaptive storytelling. Games can modify stories and character interactions based on players’ feelings. This personalization creates a unique gaming experience. It keeps players interested and improves their enjoyment and satisfaction.

9. Mobile Chatting Apps: Mobile chatting apps use AI to recognize facial emotions. The technology analyzes users’ faces and gives real-time emotional feedback during chats. The app adjusts its answers based on the emotions it detects. Emotionally intelligent chatbots create better and more helpful interactions. The apps help build a stronger emotional connection and give personalized support. They can make users feel happier and improve mental well-being online.

These applications show how AI facial emotion recognition affects different areas and how it can improve human experiences and interactions. Research articles and industry analyses give more information on these topics.
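
Several of these applications react to emotions in real time, and a single misread frame should not flip the response. One possible way to handle this, sketched below, is a majority vote over the most recent frames. This is an assumption for illustration, not a method described in this article. The `EmotionSmoother` class, the window size, and the sample frame labels are all hypothetical.

```python
from collections import Counter, deque

class EmotionSmoother:
    """Majority vote over the last `window` per-frame emotion labels."""

    def __init__(self, window=5):
        # deque with maxlen drops the oldest label automatically
        self.recent = deque(maxlen=window)

    def update(self, label):
        """Record a new per-frame label and return the current stable emotion."""
        self.recent.append(label)
        # most_common(1) returns the label with the highest count in the window
        return Counter(self.recent).most_common(1)[0][0]

smoother = EmotionSmoother(window=5)
# Made-up per-frame predictions: one stray "surprise", then a real shift to "sadness".
frames = ["happiness", "happiness", "surprise", "happiness",
          "sadness", "sadness", "sadness"]
stable = [smoother.update(f) for f in frames]
print(stable[-1])
```

A larger window gives steadier output but reacts more slowly to a genuine change in emotion, so the window size is a trade-off that depends on the application.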

Ethical Aspects of AI Emotion Recognition

AI facial emotion recognition technology is becoming more common in society, and it raises important ethical concerns about privacy. Many people do not know that their faces are being watched and recorded. When they do find out, they can feel like they are always being monitored. This constant monitoring can cause serious privacy problems, especially when data is lost or misused. It shows that we need strong privacy protections.

Informed consent is also very important in AI ethics, and it must be considered whenever emotion recognition systems are used. Many apps collect biometric data without asking users for permission, which raises ethical questions about these practices. Regulations require consent, but enforcement is not always strong. Users often do not know how their data will be used. Clear rules for consent are needed to keep data collection ethical.

Finally, there is a big risk when AI facial emotion recognition is used for surveillance. It can be a serious threat to people’s freedoms.

Challenges of AI Emotion Recognition

AI facial emotion recognition technology faces many challenges. These challenges can affect how well the technology works and how people accept it. They fall into two groups. One group is technical problems, such as accuracy and bias. The other group is societal problems, including public trust and regulation. It is important to understand these challenges in order to develop and use this technology responsibly. A related question also arises here: can AI develop emotions?

- Accuracy and Bias in Emotion Recognition: AI systems often fail to recognize emotions accurately. One major cause is bias in the training data. These biases can cause mistakes in identifying emotions, especially for diverse groups of people. The algorithms can also make errors when they try to read subtle or complex emotions. The result is unreliable output, which can affect user experience and the technology’s effectiveness in important situations.

- Cultural Differences in Emotional Expression: Emotional expressions can be very different from one culture to another. This makes it hard to create a system that works for everyone. The idea that facial expressions show the same emotions everywhere is often wrong. This can cause misunderstandings and mistakes in emotion recognition. Thus, these systems might struggle to understand expressions from people with different cultural backgrounds. It is necessary to use culturally aware methods to make the technology more reliable.

- Public Trust and Acceptance: AI facial emotion recognition technologies need public trust to be successful. People worry about their privacy, the safety of data, and how the technology might be misused. These concerns can stop people from accepting the technology. Users often worry about how their emotional data might be used. They feel especially concerned in sensitive areas like healthcare or security. This concern makes them hesitant to use these technologies.

- Regulatory Hurdles: The rules about AI and facial recognition technologies are still changing. This creates uncertainty for businesses and developers. A lack of clear guidelines can slow down innovation, because companies might hesitate to invest in technologies that may be subject to future regulation. It is important to find a balance between promoting new technologies and protecting individual rights. This is essential for using emotion recognition systems responsibly.
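
The accuracy and bias challenge can be checked in practice by comparing how well a model performs for different groups. The sketch below shows one simple way to do that. It is an illustration only: the `accuracy_by_group` and `accuracy_gap` helpers and the group names are hypothetical, and the records are made-up examples rather than real measurements.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}

def accuracy_gap(per_group):
    """Difference between the best-served and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())

# Made-up evaluation records for illustration only; not real measurements.
records = [
    ("group_a", "happiness", "happiness"),
    ("group_a", "anger", "anger"),
    ("group_a", "sadness", "sadness"),
    ("group_a", "surprise", "happiness"),
    ("group_b", "happiness", "happiness"),
    ("group_b", "anger", "sadness"),
    ("group_b", "sadness", "surprise"),
    ("group_b", "surprise", "surprise"),
]
per_group = accuracy_by_group(records)
print(per_group, accuracy_gap(per_group))
```

A large gap between groups suggests that the training data may under-represent some people or some ways of expressing emotion, which is a signal to collect more varied data before deployment.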

These challenges show that we need more research and ethical thinking. We also need strong rules to ensure the proper use of AI facial emotion recognition technology.

Future Trends

AI facial emotion recognition (FER) technology is poised for major improvements that will increase its accuracy and expand its uses in many fields. Researchers are moving past the basic emotions to create systems that can understand a wider range of emotions. This change helps the technology capture small details in human expressions. It makes interactions between humans and computers more natural and caring.

The facial emotion recognition industry is likely to grow a lot in the coming years, driven by better AI and a rising need for emotional intelligence in technology. As industries like healthcare, marketing, and security see the benefits of emotion recognition, the market will probably double in size. This growth shows how important it is to understand emotional context for better user experiences and engagement.

New ways to use FER technology are appearing. For example, AI avatars could respond quickly to users’ emotions. This would create more engaging experiences in virtual settings. Furthermore, businesses are looking at how emotion recognition can help with marketing. This would allow for personalized experiences for customers. These trends show how facial emotion recognition can change our interactions. This technology can help make our communication with machines more caring and understanding.

Conclusion

AI facial emotion recognition technology changes how we talk to machines. It makes communication easier and more empathetic in different areas. In healthcare, it can help improve patient care. In marketing, it can help make campaigns more personal. At its best, this technology can read human emotions well, which opens many new possibilities. As it improves, we can use it in mobile apps and our daily lives to connect better and improve user experiences. By using new AI advancements, businesses and developers can build systems that understand both words and emotions, which leads to deeper interactions.

However, when we use AI to understand emotions, we must think about ethical issues such as privacy, consent, and bias. Handling these problems carefully will help us use the technology responsibly. Tech developers and policymakers must work together to create rules for the use of AI facial emotion recognition that preserve its benefits and reduce its risks. By focusing on ethical ways of using AI and building public trust, we can use this technology to improve our interactions and make the digital world more caring.